Microservices with Azure Kubernetes and Docker

Abstract

This blog post explains microservices architecture and the data issues that arise when working with microservices. It then gives an overview of the demo microservices application, which we configure step by step. The demo application uses Docker images to host microservices inside Azure Kubernetes Service. Finally, it discusses how to utilize the power of Kubernetes.

Introduction

Microservices have become increasingly popular, and for good reason. In this blog post, you will learn how to create a microservices architecture application using .NET Core, Docker, Azure Kubernetes Service, Azure Service Bus and Azure Logic Apps.

Prerequisites

You need a basic knowledge of working with Azure services, .NET Core and SQL databases. You also need to have Docker installed on your machine.

Before we dive into the implementation, let’s have a look at what a microservice is.

Microservices Architecture

Microservices architecture lets you have an application distributed across services independent of each other. Following are some important attributes of a microservice:

High availability: Every microservice should return a response for every request within an acceptable amount of time.

Partition tolerance: When an instance of a microservice goes down, it shouldn’t affect the rest of the system. The microservice should remain consistently available.

Eventual consistency: Whenever a change happens to the data in a single microservice, that change should eventually be propagated to the other relevant microservices.

Asynchronous communication: Microservices should not communicate with each other directly. Instead, they should use a message broker to handle the communication for them.

Microservices and Data

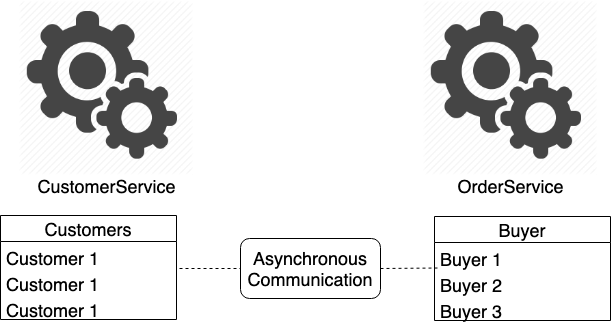

This is where most of the issues arise in microservices. Let’s take an example. Imagine that an OrderService needs buyer details from CustomerService (buyers are the customers stored in CustomerService). If OrderService makes a synchronous call to CustomerService to get that data, OrderService depends on CustomerService. If CustomerService goes down, OrderService will not be able to carry out an order.

The solution is to replicate the data that OrderService requires in its own database, so it does not need to make a service request to get the data. But this introduces another problem. What happens when CustomerService modifies some customer data? That would make the buyer data in OrderService obsolete, leaving the system data in an inconsistent state.

The solution to this is asynchronous communication. CustomerService should notify OrderService of the data change via asynchronous communication so that OrderService can update its data. Please refer to Figure 01.
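
To make the idea concrete, here is a minimal in-memory sketch of the replicate-and-notify pattern described above. It is not code from the demo application: the class names, the CustomerChanged event and the use of Python's queue.Queue as a stand-in message broker are all assumptions made for illustration.

```python
import queue
from dataclasses import dataclass


@dataclass
class CustomerChanged:
    """Hypothetical event published whenever CustomerService modifies a customer."""
    customer_id: int
    name: str


class CustomerService:
    def __init__(self, broker: queue.Queue):
        self.customers = {}  # stands in for CustomerService's own database
        self.broker = broker

    def upsert_customer(self, customer_id: int, name: str) -> None:
        self.customers[customer_id] = name
        # Publish the change to the broker instead of calling OrderService directly.
        self.broker.put(CustomerChanged(customer_id, name))


class OrderService:
    def __init__(self, broker: queue.Queue):
        self.buyers = {}  # replicated buyer data in OrderService's own database
        self.broker = broker

    def process_pending_events(self) -> None:
        # Drain the queue and bring the local buyer copy up to date.
        while True:
            try:
                event = self.broker.get_nowait()
            except queue.Empty:
                break
            self.buyers[event.customer_id] = event.name

    def place_order(self, customer_id: int, item: str) -> str:
        # Uses only local data, so it works even if CustomerService is down.
        buyer = self.buyers[customer_id]
        return f"{item} ordered by {buyer}"


broker = queue.Queue()
customers = CustomerService(broker)
orders = OrderService(broker)

customers.upsert_customer(1, "Alice")
orders.process_pending_events()
first_order = orders.place_order(1, "book")

customers.upsert_customer(1, "Alice Smith")
stale_name = orders.buyers[1]   # not yet updated: temporarily inconsistent
orders.process_pending_events()
fresh_name = orders.buyers[1]   # updated after the event is processed
```

Between the second update and the next call to process_pending_events, OrderService still holds the old name. That window is the eventual consistency trade-off: orders keep working without CustomerService, at the cost of briefly stale buyer data.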

Sample Application

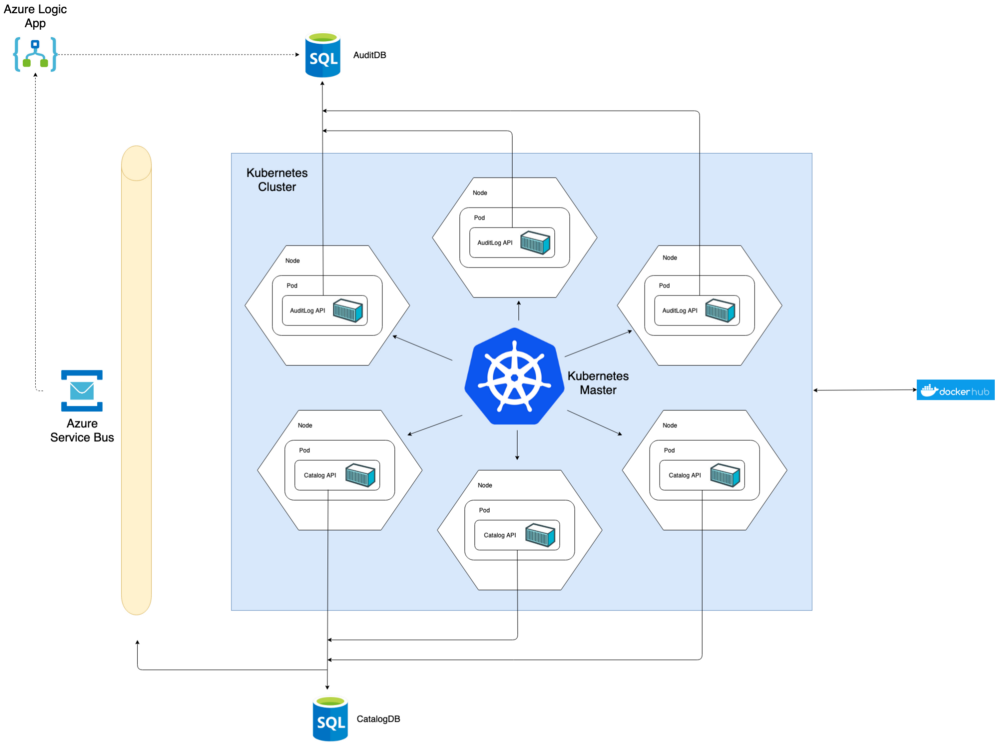

The application we’re going to create consists of two .NET Core Web API services called AuditAPI and CatalogAPI. Each service has its own SQL database hosted in Azure.

Both services are Dockerized, hosted in a public DockerHub repository, and deployed into a Kubernetes cluster hosted in Azure. The asynchronous communication is handled by Azure Service Bus and Logic Apps. Please refer to Figure 02.

CatalogAPI and AuditAPI provide basic CRUD operations for the Products and AuditLogs entities respectively. Furthermore, whenever a new Product is added via CatalogAPI, an AuditLog should be inserted into the AuditLogs table. This is handled via asynchronous communication.

Now let’s start the implementation.

1. Getting the APIs Working

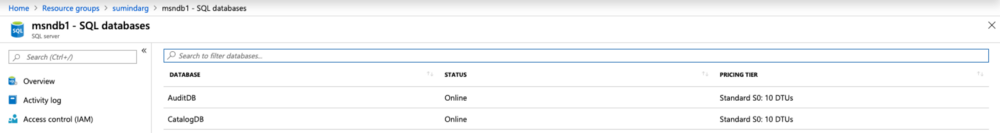

Go into the Azure portal and create two SQL databases called AuditDB and CatalogDB. Refer to Figure 03.

Clone my code from the GitHub repo (linked at the end of this post) and open the Catalog solution. Navigate to the Properties -> LaunchSettings.json file and insert the DB_SERVER, DB_USER and DB_PASSWORD values of the CatalogDB database you just created. Now run the API and you should be redirected to the http://localhost:5001/api/version endpoint. To make sure the database migrations worked, go to http://localhost:5001/api/products, which should return an empty result.

Now open the Audit solution. Navigate to the Properties -> LaunchSettings.json file and insert the DB_SERVER, DB_USER and DB_PASSWORD values of the AuditDB database you created. Now run the API and you should be redirected to the http://localhost:5000/api/version endpoint. To make sure the database migrations worked, go to http://localhost:5000/api/auditlogs, which should return an empty result.

The CatalogDB and AuditDB databases should now contain the Products and AuditLogs tables respectively, created by the .NET migrations.

2. Creating an Asynchronous Messaging Back-end in Azure

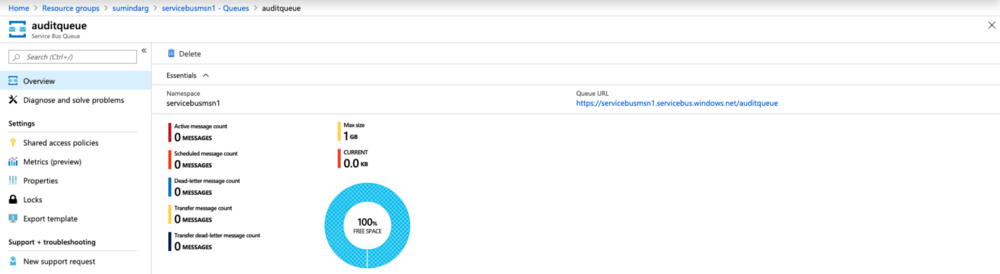

Create an Azure Service Bus namespace. The Basic pricing tier is enough, as we are only using a queue. Then create a queue called auditqueue. Refer to Figure 04. Whenever a new Product is added, a message with the new Product’s details will be inserted into the queue.

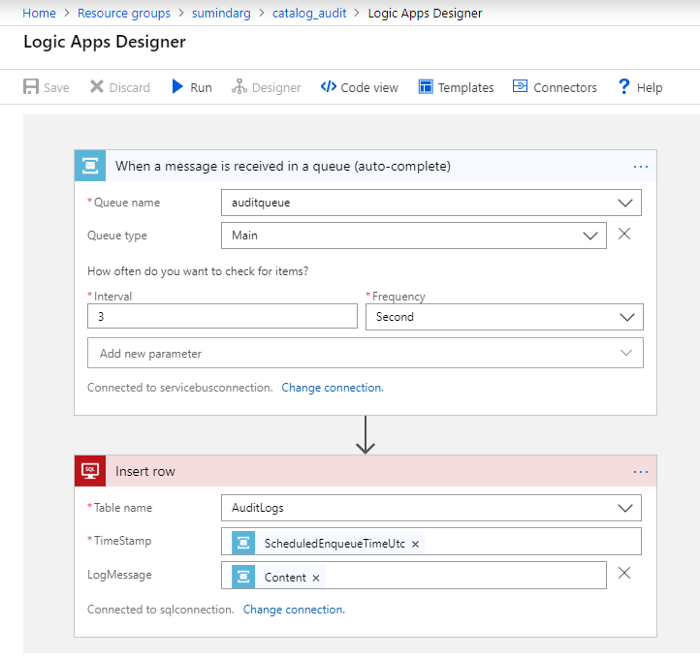

Now create an Azure Logic App to react to the Service Bus queue messages. The Logic App will have the trigger "When a message is received in a queue" and the SQL Server action "Insert Row". Refer to Figure 05.

Please note that the content of the queue message will be inserted into the LogMessage column of the AuditLogs table.
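
If it helps to picture what the queue and the Logic App do together, the following is a small local simulation of the flow. It is only a sketch: queue.Queue stands in for auditqueue, an in-memory SQLite database stands in for AuditDB, and the function names, the Id column and the product fields are hypothetical. Only the AuditLogs table and its LogMessage column come from the setup above.

```python
import json
import queue
import sqlite3

audit_queue = queue.Queue()             # stands in for the auditqueue Service Bus queue
audit_db = sqlite3.connect(":memory:")  # stands in for AuditDB
# Assumed minimal schema; the real table is created by the .NET migrations.
audit_db.execute("CREATE TABLE AuditLogs (Id INTEGER PRIMARY KEY, LogMessage TEXT)")


def add_product(product: dict) -> None:
    """What CatalogAPI does after saving a Product: enqueue its details."""
    audit_queue.put(json.dumps(product))


def run_logic_app_once() -> bool:
    """Mimic the trigger (message received) followed by the Insert Row action."""
    try:
        message = audit_queue.get_nowait()
    except queue.Empty:
        return False  # nothing to process
    with audit_db:  # commits the insert on success
        audit_db.execute("INSERT INTO AuditLogs (LogMessage) VALUES (?)", (message,))
    return True


add_product({"name": "Keyboard", "price": 49.99})  # hypothetical product fields
handled = run_logic_app_once()
rows = audit_db.execute("SELECT LogMessage FROM AuditLogs").fetchall()
```

Note that CatalogAPI never talks to AuditAPI or AuditDB here. It only writes to the queue, which is what keeps the two services decoupled.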

3. Verifying the Asynchronous Messaging is Working

Open the Catalog solution, navigate to the Properties -> LaunchSettings.json file and add your Azure Service Bus connection string as the value of the SB_CONNECTION property.

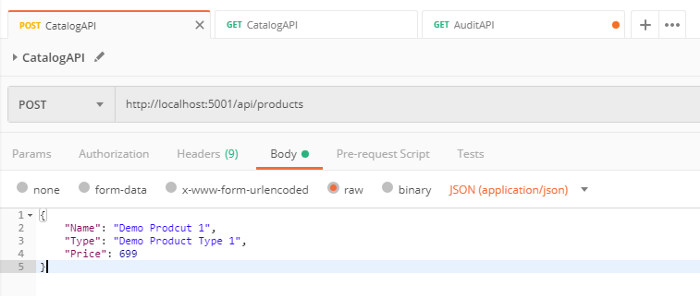

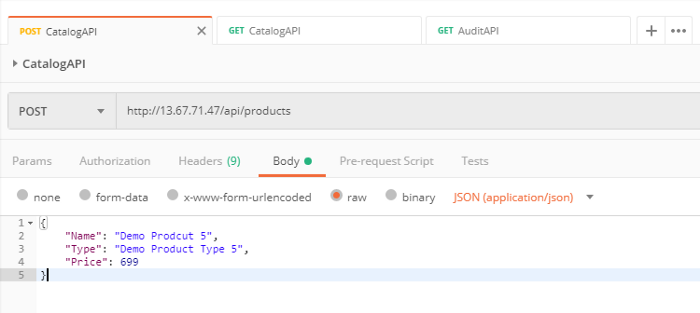

Now run both the Catalog and Audit solutions and insert a new Product by sending a POST request to the following endpoint:

http://localhost:5001/api/products

Use a tool of your preference. Figure 06 shows the request using Postman.

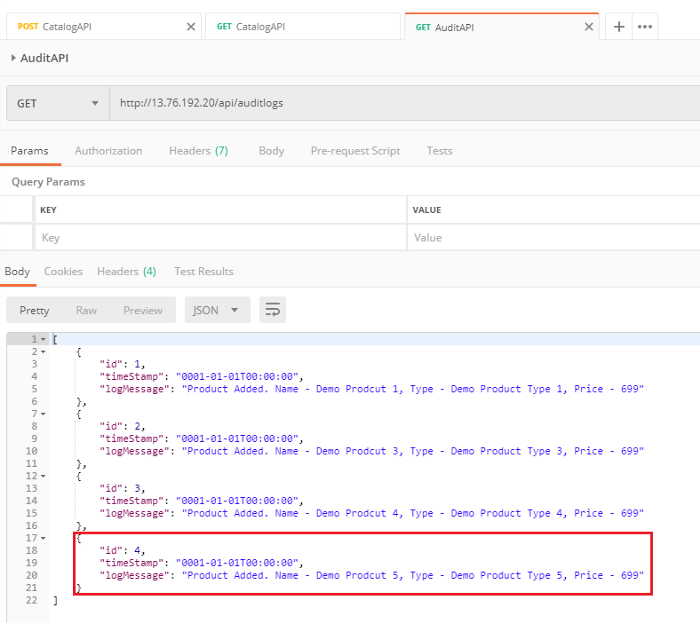

Now go to the endpoint:

http://localhost:5000/api/auditlogs

You should be able to see that a new AuditLog message has been inserted for the Product we just added.

This was achieved through asynchronous communication, using Azure Service Bus and the Logic App.
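
If you prefer a script over Postman, the same check can be sketched with Python's standard library. The two URLs are the local endpoints used above. The product fields are hypothetical, since the actual request body depends on the Product model in the repo, and the sketch assumes the audit logs endpoint returns a JSON array.

```python
import json
import urllib.request

CATALOG_URL = "http://localhost:5001/api/products"
AUDIT_URL = "http://localhost:5000/api/auditlogs"


def build_add_product_request(product: dict) -> urllib.request.Request:
    """Build (but do not send) the POST request that adds a Product."""
    body = json.dumps(product).encode("utf-8")
    return urllib.request.Request(
        CATALOG_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def fetch_audit_logs() -> list:
    """Read the audit logs; assumes the endpoint returns a JSON array."""
    with urllib.request.urlopen(AUDIT_URL) as response:
        return json.loads(response.read().decode("utf-8"))


# Hypothetical product fields; adjust them to match the Product model.
request = build_add_product_request({"name": "Keyboard", "price": 49.99})

# With both APIs running locally, send the request and then read the logs:
# urllib.request.urlopen(request)
# print(fetch_audit_logs())
```

Because the audit log is written asynchronously, the new entry may take a few seconds to appear after the POST returns.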

4. Dockerizing the APIs

Now that we have our APIs working, let’s Dockerize them!

Prerequisites

1. You need to have a DockerHub repository

2. Docker must be installed, and you must be logged in to DockerHub from the Docker CLI.

In each of the Catalog and Audit projects, I have included a Dockerfile, which we will build to get a Docker image for each API.

The Dockerfile for CatalogAPI is at Catalog\CatalogAPI\Dockerfile in the repo.

The Dockerfile for AuditAPI is at Audit\Audit\Dockerfile in the repo.

Let’s build the catalogapi image first.

1. Open PowerShell or CMD and navigate to the following directory.

Docker-Kubernetes-ASB-Microservices\Catalog

2. Run this command.

docker build -f .\CatalogAPI\Dockerfile -t catalogapi:local .

Let’s build the auditapi image.

1. Open PowerShell or CMD and navigate to the following directory.

Docker-Kubernetes-ASB-Microservices\Audit

2. Run this command.

docker build -f .\Audit\Dockerfile -t auditapi:local .

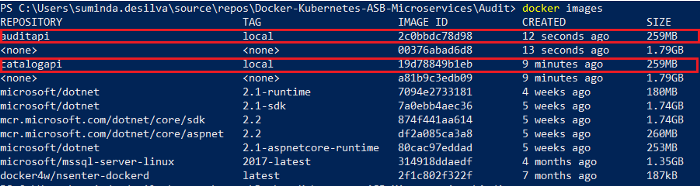

Verify that images are built successfully using the following command:

docker images

Refer to Figure 07.

To upload both images to DockerHub, run these commands to tag and push the catalogapi and auditapi images to your DockerHub repository.

docker tag catalogapi:local <-Your DockerHub Username->/catalogapi:v1

docker push <-Your DockerHub Username->/catalogapi:v1

docker tag auditapi:local <-Your DockerHub Username->/auditapi:v1

docker push <-Your DockerHub Username->/auditapi:v1

Verify that the images were successfully uploaded to your repository.

5. Deploying API Docker Images in Azure Kubernetes Cluster

Prerequisites

1. A Kubernetes Service must be created in the Azure portal.

2. Your PowerShell or CMD session must be connected to that Kubernetes Service.

Once you have the above prerequisites, open the Kubernetes manifest file at Docker-Kubernetes-ASB-Microservices\Kubernetes\deployment.yaml and edit the following properties with your details.

<-Base 64 Encoded Service Bus Connection String->

<-Your DockerHub Catalog API Image->:<-Tag->

<-Your DockerHub Audit API Image->:<-Tag->

<-DB Server->

<-DB Username->

<-DB Password->

Refer to the sample Kubernetes manifest file deployment-example.yaml in the same directory.
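
The first property expects the Service Bus connection string in Base64. One way to produce it is the short Python sketch below. The function name is hypothetical and the sample connection string is a placeholder in the usual Service Bus format, not a real value, so substitute your own.

```python
import base64


def encode_for_manifest(connection_string: str) -> str:
    """Return the Base64 text to paste into deployment.yaml."""
    return base64.b64encode(connection_string.encode("utf-8")).decode("ascii")


# Placeholder connection string; replace it with the one from your Service Bus namespace.
sample = (
    "Endpoint=sb://<-namespace->.servicebus.windows.net/;"
    "SharedAccessKeyName=<-key name->;SharedAccessKey=<-key->"
)
encoded = encode_for_manifest(sample)

# Decoding again is a quick way to confirm nothing was lost or added.
decoded = base64.b64decode(encoded).decode("utf-8")
```

Make sure the string you encode has no trailing newline or surrounding quotes, otherwise those characters become part of the decoded connection string.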

1. Open PowerShell or CMD and navigate to the following directory.

Docker-Kubernetes-ASB-Microservices\Kubernetes

2. Run the following command.

kubectl apply -f deployment.yaml

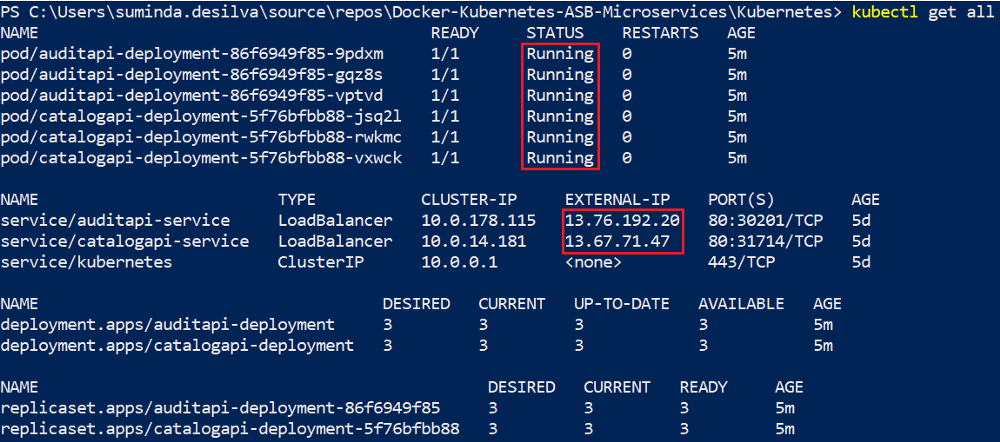

3. Run the following command and make sure all the pods are in the Running state. Wait until external IPs are allocated to the API services.

kubectl get all

Refer to Figure 08. Note the external IP addresses for catalogapi-service and auditapi-service. Now we can do end-to-end testing like we did in step 3, but this time against the Kubernetes-hosted API services instead of the locally hosted APIs.
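
If you would rather script the wait than re-run kubectl get all by hand, the sketch below reads a service's external IP from the JSON that kubectl returns. It assumes the services are of type LoadBalancer, which is where Kubernetes reports the address under status.loadBalancer.ingress. The function names and the sample dictionaries are made up for illustration.

```python
import json
import subprocess
from typing import Optional


def external_ip(service: dict) -> Optional[str]:
    """Return the first external IP of a Service object, or None while it is pending."""
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    for entry in ingress:
        if entry.get("ip"):
            return entry["ip"]
    return None


def get_service(name: str) -> dict:
    """Fetch a Service as JSON; requires kubectl to be connected to the cluster."""
    output = subprocess.run(
        ["kubectl", "get", "service", name, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(output)


# Hand-written sample objects showing the two states you will see.
pending = {"status": {"loadBalancer": {}}}
ready = {"status": {"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}}}
pending_ip = external_ip(pending)
ready_ip = external_ip(ready)

# Against the real cluster:
# print(external_ip(get_service("catalogapi-service")))
# print(external_ip(get_service("auditapi-service")))
```

A None result corresponds to the pending state shown in the EXTERNAL-IP column, so you can poll until both services return an address.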

6. Verifying Kubernetes Cluster Services are Working

Insert a new Product by sending a POST request to the following endpoint:

http://<-catalogapi-service external IP->/api/products

Figure 09 shows the request using Postman.

Now go to the endpoint:

http://<-auditapi-service external IP->/api/auditlogs

You should be able to see that a new AuditLog message has been inserted for the Product we just added.

Utilizing The Power of Kubernetes

Now that we have a fully working Kubernetes cluster with two API services, we can use the features provided by Kubernetes, such as scaling, rolling upgrades, roll-out history and rollbacks.

Following are some useful commands to manage the cluster.

To change the number of replicas in a deployment

kubectl scale deployment/<-deployment name-> --replicas=10

To change the pod image in a deployment (this is used for upgrades)

kubectl set image deployment/<-deployment name-> <-container name->=<-image->:<-tag->

To check the roll-out history

kubectl rollout history deployment/<-deployment name->

To undo a roll-out (roll back to the previous revision)

kubectl rollout undo deployment/<-deployment name->

Link to my Github Repo

https://github.com/SumindaD/Docker-Kubernetes-ASB-Microservices

Suminda De Silva

Senior Software Engineer