Increasing Client Satisfaction via Minimal Defect Leakage

We all love to build software and test it on a comfortable timeline, like the ones prescribed by older methodologies such as the waterfall model, but unfortunately that is not always how things work today. Most development projects now demand a fast-paced approach with shorter release cycles, without any compromise on software quality.

So, no matter how competent you are at testing or how much effort you put into testing your product within the agreed timeline and budget, it will be in vain if the Client uncovers more defects during their UAT cycle than the SLA allows. The question, then, is how to bring defect leakage down and keep it within an SLA of 5% or less. Thankfully, there are some best practices that can help.
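
Defect leakage is commonly measured as the share of all known defects that were found in UAT rather than during QA testing. The sketch below assumes that formulation, UAT defects / (QA defects + UAT defects) × 100. Some teams divide by QA defects only, so confirm which definition your SLA actually uses. The function names and sample figures are hypothetical.

```python
def defect_leakage_percent(qa_defects: int, uat_defects: int) -> float:
    """Percentage of all known defects that escaped QA and were found in UAT.

    Assumes: leakage = UAT defects / (QA defects + UAT defects) * 100.
    """
    total = qa_defects + uat_defects
    if total == 0:
        return 0.0  # nothing found anywhere, so nothing leaked
    return uat_defects * 100 / total


def meets_leakage_sla(qa_defects: int, uat_defects: int, sla_percent: float = 5.0) -> bool:
    """True if leakage is at or below the SLA threshold (5% by default)."""
    return defect_leakage_percent(qa_defects, uat_defects) <= sla_percent


# Hypothetical release figures
print(defect_leakage_percent(qa_defects=190, uat_defects=10))  # 5.0
print(meets_leakage_sla(qa_defects=190, uat_defects=10))       # True
print(meets_leakage_sla(qa_defects=90, uat_defects=10))        # False (10.0%)
```

Under this definition, a team that finds 190 defects in QA can afford at most 10 more in UAT to stay within a 5% SLA.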

- Getting involved in requirement discussions/workshops

- Understanding requirements thoroughly

Clarify the test boundary

Any form of software testing needs to address some important aspects: who is doing what, which test environments and devices to use, the test scope you are responsible for, and the types of tests to be carried out. For example, the application you are testing may not work in isolation, because external systems may need to communicate with it. There could also be non-functional requirements that your Client has in mind but that only surface once they start UAT.

Needless to say, these variables must be identified, and responsibilities agreed, from the very outset. Defining your test boundary is therefore a task for the planning stage, so that there are no surprises at UAT.

Knowing what the Client is going to test as part of their UAT

It helps to understand what your Client plans to verify as part of their UAT. UAT testers will focus on the business-critical flows and features that must carry minimal risk of breaking in production, so building this understanding should be planned from the test design phase. This can be challenging, because UAT testers are not always easy to reach on a large project, especially when they are spread across different geographic locations.

One strategic approach is to share your test scenarios with some of the key UAT testers during test design, to check whether you have missed any important business-critical scenarios. Also, if your UAT Team plans to prepare UAT test plans or test cases, try to obtain them in advance so that you can ensure proper test coverage before the UAT release.

Always try to use real/production-like data, not dummy data

Put yourself in a real business user’s shoes and not just as a tester

Normally, we start executing the test cases we have written in order to find bugs, but have you ever thought about how a real user would actually use the product? For instance, real users might not complete a workflow in one sitting. Instead, they may save the steps they have completed and resume later. You may not encounter any problem when you run through the workflow without a break, yet problems might appear when the same actions are performed with breaks in between.
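
One way to act on this is to run the same workflow both uninterrupted and with a save-and-resume break at every possible step, then check that all paths end in the same state. The sketch below uses a hypothetical `ApplicationForm` class standing in for the real system. In practice, the save and resume calls would go through the application's own UI or API.

```python
from __future__ import annotations

import json


class ApplicationForm:
    """Hypothetical multi-step workflow standing in for the system under test."""

    STEPS = ("personal_details", "address", "payment", "confirmation")

    def __init__(self) -> None:
        self.data: dict[str, str] = {}
        self.current = 0  # index of the next step to complete

    def complete_step(self, value: str) -> None:
        self.data[self.STEPS[self.current]] = value
        self.current += 1

    def save_draft(self) -> str:
        # Serialize progress so the user can come back later
        return json.dumps({"data": self.data, "current": self.current})

    @classmethod
    def resume(cls, draft: str) -> ApplicationForm:
        state = json.loads(draft)
        form = cls()
        form.data = state["data"]
        form.current = state["current"]
        return form

    def is_complete(self) -> bool:
        return self.current == len(self.STEPS)


def run_workflow(values: list[str], pause_after: int | None = None) -> ApplicationForm:
    """Complete every step, optionally saving and resuming after `pause_after` steps."""
    form = ApplicationForm()
    for i, value in enumerate(values):
        if pause_after is not None and i == pause_after:
            form = ApplicationForm.resume(form.save_draft())  # simulate a break
        form.complete_step(value)
    return form


values = ["Jane Doe", "1 Main Street", "VISA", "yes"]  # hypothetical inputs
baseline = run_workflow(values)
for pause in range(1, len(values)):
    resumed = run_workflow(values, pause_after=pause)
    assert resumed.is_complete(), f"workflow incomplete after pausing at step {pause}"
    assert resumed.data == baseline.data, f"data differs after pausing at step {pause}"
print("All save-and-resume paths match the uninterrupted run")
```

Looping over every pause point, rather than picking one, catches defects that only appear when a session is interrupted at a particular step.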

Identifying who the real users will be, and learning about the business domain and how it operates, goes a long way toward overcoming such challenges. It also gives you more confidence in the testing you do.

Continuous Regression Testing

Regression testing should ideally be performed regularly, depending on the development model being followed: daily, weekly, bi-weekly, at the end of functional testing, and so forth. Even after thorough functional testing, changes made during the development lifecycle can introduce new errors. This defect injection rate tends to be high when the Development Team is working to a tight schedule and under pressure.

More regression testing can reveal more defects, which helps reduce production defects significantly. Continuous maintenance of your regression suite is essential, so revisiting the regression test cases at the end of each sprint or release is an important practice to follow throughout the project.
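
Revisiting the suite is easier when each regression case records when it was last reviewed. The sketch below assumes a hypothetical export from a test management tool, represented as `CaseRecord` objects with tags and a `last_reviewed_sprint` number. It lists the regression cases that have not been revisited recently.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CaseRecord:
    """Hypothetical test-case record exported from a test management tool."""
    case_id: str
    tags: frozenset[str]
    last_reviewed_sprint: int


def regression_suite(cases: list[CaseRecord]) -> list[CaseRecord]:
    """Cases tagged for regression, sorted by ID for repeatable runs."""
    return sorted((c for c in cases if "regression" in c.tags), key=lambda c: c.case_id)


def due_for_review(cases: list[CaseRecord], current_sprint: int, max_age: int = 1) -> list[str]:
    """IDs of regression cases not revisited within the last `max_age` sprints."""
    return [
        c.case_id
        for c in regression_suite(cases)
        if current_sprint - c.last_reviewed_sprint > max_age
    ]


cases = [
    CaseRecord("TC-103", frozenset({"regression", "payment"}), last_reviewed_sprint=4),
    CaseRecord("TC-101", frozenset({"regression", "login"}), last_reviewed_sprint=7),
    CaseRecord("TC-102", frozenset({"smoke"}), last_reviewed_sprint=3),
]
print([c.case_id for c in regression_suite(cases)])  # ['TC-101', 'TC-103']
print(due_for_review(cases, current_sprint=8))        # ['TC-103']
```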

Learn from the past

As humans, we tend to make mistakes. The causes include lack of work experience, lack of domain knowledge, low productivity, a lack of proper reviews, poor communication among project stakeholders, and so on. Therefore, at the end of every sprint or release, it is essential to look back at where mistakes were made and identify areas for improvement.

A thorough review of UAT defects must also take place to understand what went wrong and why, so that similar defect leakage can be avoided in the future.
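
A simple starting point for such a review is to tag each UAT defect with a root cause and count how often each cause occurs. The defect log and category labels below are hypothetical, loosely based on the causes listed above.

```python
from __future__ import annotations

from collections import Counter


def root_cause_summary(defects: list[dict]) -> list[tuple[str, int]]:
    """Count UAT defects per root cause, most frequent first."""
    return Counter(d["root_cause"] for d in defects).most_common()


# Hypothetical UAT defect log
uat_defects = [
    {"id": "UAT-1", "root_cause": "lack of domain knowledge"},
    {"id": "UAT-2", "root_cause": "lack of proper reviews"},
    {"id": "UAT-3", "root_cause": "lack of domain knowledge"},
    {"id": "UAT-4", "root_cause": "poor communication"},
    {"id": "UAT-5", "root_cause": "lack of domain knowledge"},
    {"id": "UAT-6", "root_cause": "lack of proper reviews"},
]
for cause, count in root_cause_summary(uat_defects):
    print(f"{cause}: {count}")
# lack of domain knowledge: 3
# lack of proper reviews: 2
# poor communication: 1
```

The most frequent causes are a natural place to focus the next retrospective's improvement actions.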

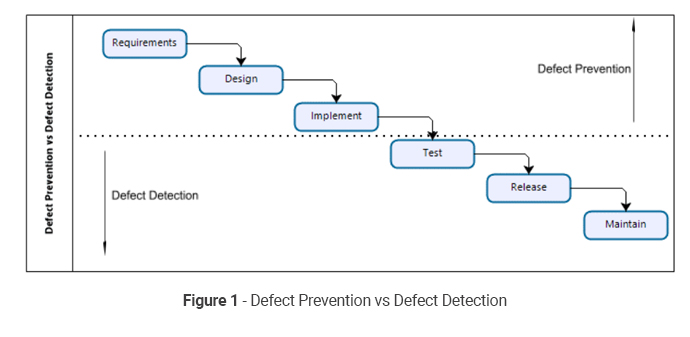

Shift Left Testing is a popular term in today’s agile development world. The idea is to involve QA Engineers from the earliest stages of a project, so that defects are prevented rather than requiring more effort to detect them later on.

An important consequence is that the QA Engineer also becomes a key contributor to finalizing the requirements by identifying requirement defects and anomalies at the beginning (defect prevention). QA Engineers can draw on past experience and put forward suggestions when the requirements start to drift beyond their intended boundaries.

As testing professionals, we must always be proficient at correctly understanding the domain and the application requirements. This is essential because the QA Team cannot rely on the Development Team for requirements. Lack of clarity in software requirements is one of the biggest barriers to effective software development. Key actions for gaining a clear understanding of application requirements include proper requirement analysis, studying previous systems in similar domains, and closer collaboration with Client partners.

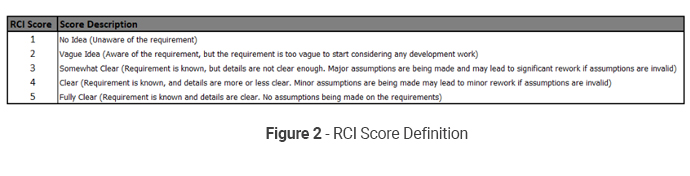

QA Teams should act as requirement advocates for developers and support the Client with requirement refinements whenever necessary. The Requirement Clarity Index (RCI) is a key metric that can be used to track how clearly each team member understands the application requirements.

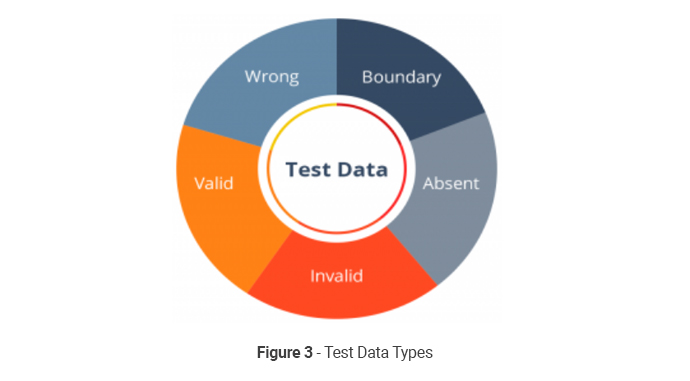

Successful test execution requires different types of test data, such as valid, invalid, wrong, and boundary data. Test data also plays a major role in making testing realistic, especially the valid data used for happy path testing. Of all the test data types shown in Figure 3, valid data is particularly important because it ties directly to the application requirements.
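
Boundary data, in particular, can be derived mechanically from a field's valid range. The sketch below applies classic boundary value analysis to a hypothetical age field that accepts values from 18 to 60 inclusive. The field, the range, and the "wrong" samples are assumptions for illustration.

```python
from __future__ import annotations


def boundary_test_data(min_value: int, max_value: int) -> dict[str, list]:
    """Test data for an integer field that accepts min_value..max_value inclusive."""
    return {
        "valid_nominal": [(min_value + max_value) // 2],
        "valid_boundary": [min_value, min_value + 1, max_value - 1, max_value],
        "invalid_boundary": [min_value - 1, max_value + 1],
        "wrong_type": ["abc", "", None],  # not integers at all
    }


# Hypothetical "age" field that accepts 18 to 60
for category, samples in boundary_test_data(18, 60).items():
    print(category, samples)
# valid_nominal [39]
# valid_boundary [18, 19, 59, 60]
# invalid_boundary [17, 61]
# wrong_type ['abc', '', None]
```

The generated nominal value is only a placeholder. As the text notes, the valid data used for happy path tests is far more realistic when it resembles actual production values.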

Real data reveals key characteristics such as value lengths, the types of characters used, and data volume. Therefore, try to get a data dump from the production environment into your test environment, with the necessary data masking, to make your testing realistic. This is possible if it is a migration or maintenance project. If you cannot do this, at least try to get some sample production data from your Client project stakeholders or UAT Team before test execution begins.
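
If masking is done in-house, one approach is to replace letters and digits with pseudo-random characters of the same kind. Lengths, character types, and formats survive, while the actual values do not. The sketch below assumes a secret key held outside the test environment. It uses an HMAC of each value to seed the replacement, so the same input always masks to the same output and references between tables stay consistent. This is a minimal illustration, not a substitute for a vetted data masking tool.

```python
import hashlib
import hmac
import random
import string


def mask_value(value: str, secret: bytes) -> str:
    """Replace ASCII letters and digits with pseudo-random ones of the same kind.

    Length, case, digit positions and punctuation are preserved. The same
    value and secret always produce the same masked output.
    """
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).digest()
    rng = random.Random(int.from_bytes(digest, "big"))  # seeded per value
    masked = []
    for ch in value:
        if ch in string.ascii_lowercase:
            masked.append(rng.choice(string.ascii_lowercase))
        elif ch in string.ascii_uppercase:
            masked.append(rng.choice(string.ascii_uppercase))
        elif ch in string.digits:
            masked.append(rng.choice(string.digits))
        else:
            # Spaces, punctuation and non-ASCII characters are kept as-is,
            # so non-ASCII names would need extra handling in real use
            masked.append(ch)
    return "".join(masked)


secret = b"replace-with-a-key-kept-outside-the-test-env"  # hypothetical key
original = "Jane Doe, +94 77 123 4567"
masked = mask_value(original, secret)
print(masked)  # same length and layout as the original, different letters/digits
```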

Conclusion

Our primary goal should always be to get the maximum benefit from testing and to increase client satisfaction by delivering a quality product. Achieving this is not rocket science. It can be reached with relative ease if we:

- grasp the requirements clearly and set the test boundary
- know the users and how UAT will be performed
- use real/production-like data as much as possible and think from a business user’s perspective
- run continuous regression testing
- look back at the past to correct what went wrong

Lalindra Weliwita

Quality Assurance Manager