When you create the delivery stream, Firehose asks whether you want to transform the incoming records. To keep this tutorial simple we won’t use transformation, so choose to disable it and click Next.

AWS Kinesis Firehose – Real-time data streaming on AWS

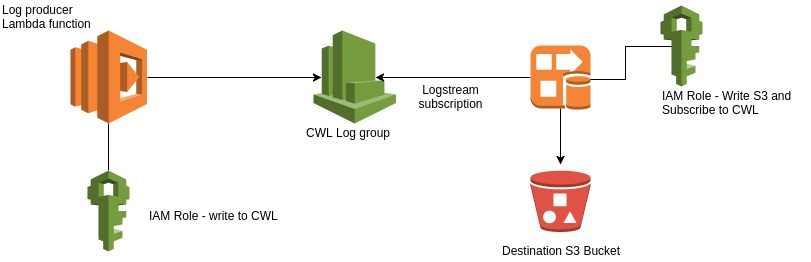

AWS Kinesis Firehose is a fully managed service for transforming and delivering streaming data to a given destination. A destination can be an S3 bucket, a Redshift cluster, Splunk, or Amazon Elasticsearch Service. In this tutorial I’ll walk you through streaming the CloudWatch Logs generated by an AWS Lambda function to an S3 bucket.

Steps

Create Lambda function to write some logs

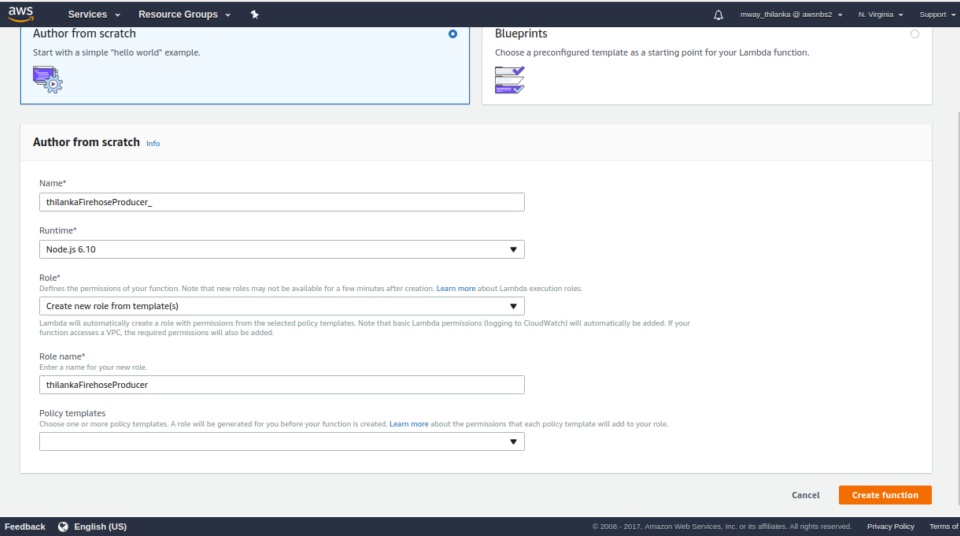

This Lambda function simply writes a log line with console.log in plain JavaScript. Name the function “thilankaFirehoseProducer”, choose to create a new IAM role as shown in the screenshot, and click Create function.

By default, the role created with the function grants it basic CloudWatch Logs access, so the function can create log streams and write log events to them. Add a simple log statement to the code as follows:

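```javascript
exports.handler = (event, context, callback) => {
  // Write a timestamped line; anything logged with console.log ends up in CloudWatch Logs.
  const dt = new Date();
  console.log('[THILANKA_LOG] log triggered from thilankaFirehoseProducer: ' + dt);

  // Tell the Lambda runtime the invocation succeeded and return a response.
  callback(null, 'Hello from Lambda');
};
```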
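To sanity-check the handler before deploying it, you can call it locally with Node.js. The sketch below repeats the handler so the file runs on its own, and uses a hypothetical `invokeLocally` helper that passes an empty event, an empty context object, and a callback standing in for the one the Lambda runtime normally supplies. The empty event and context are assumptions; this handler ignores both.

```javascript
// Same handler as above, repeated so this file runs on its own.
const handler = (event, context, callback) => {
  const dt = new Date();
  console.log('[THILANKA_LOG] log triggered from thilankaFirehoseProducer: ' + dt);
  callback(null, 'Hello from Lambda');
};

// Hypothetical local runner: resolves with whatever the handler passes to its callback.
function invokeLocally(fn, event = {}, context = {}) {
  return new Promise((resolve, reject) => {
    fn(event, context, (err, result) => (err ? reject(err) : resolve(result)));
  });
}

invokeLocally(handler).then((result) => {
  console.log('Handler returned:', result); // Handler returned: Hello from Lambda
});
```

This only exercises the code path; the log line reaches CloudWatch Logs only when the function runs in Lambda.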

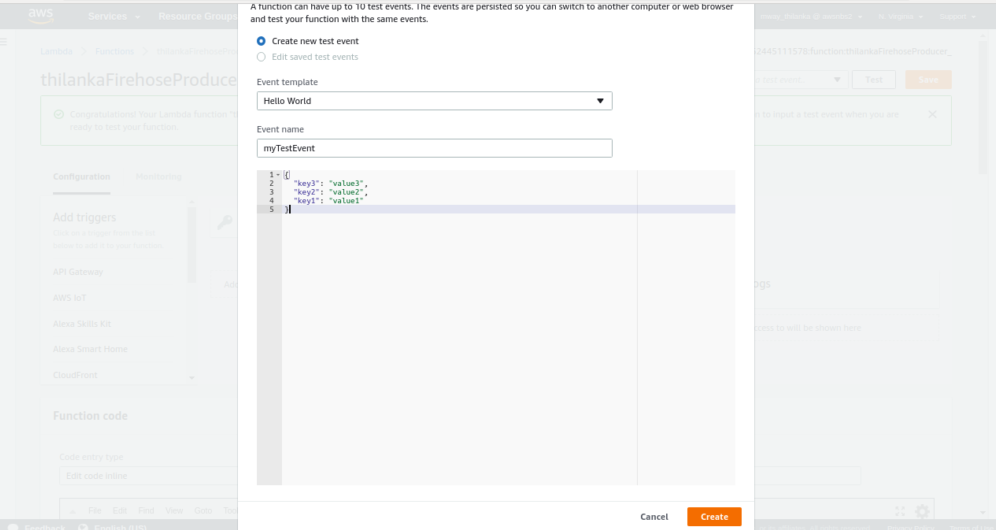

Next, verify that running the Lambda function creates logs in CloudWatch Logs. To run the function we need a test event: select the option to configure a test event from the dropdown next to the Test button and configure it as shown in the screenshot.

After you click Create and save the Lambda code, click the Test button to run the function. Once it has run, you can view its logs in CloudWatch by clicking the ‘logs’ link. If you get an error such as “No log stream found”, make sure the console is set to the same region as the Lambda function. If everything went well, you can open the log group link and view the logs.

The Lambda function is now running and writing logs to CloudWatch Logs. Next, we’ll create a Firehose delivery stream to deliver those log events to an S3 bucket.

Create Firehose Delivery Stream

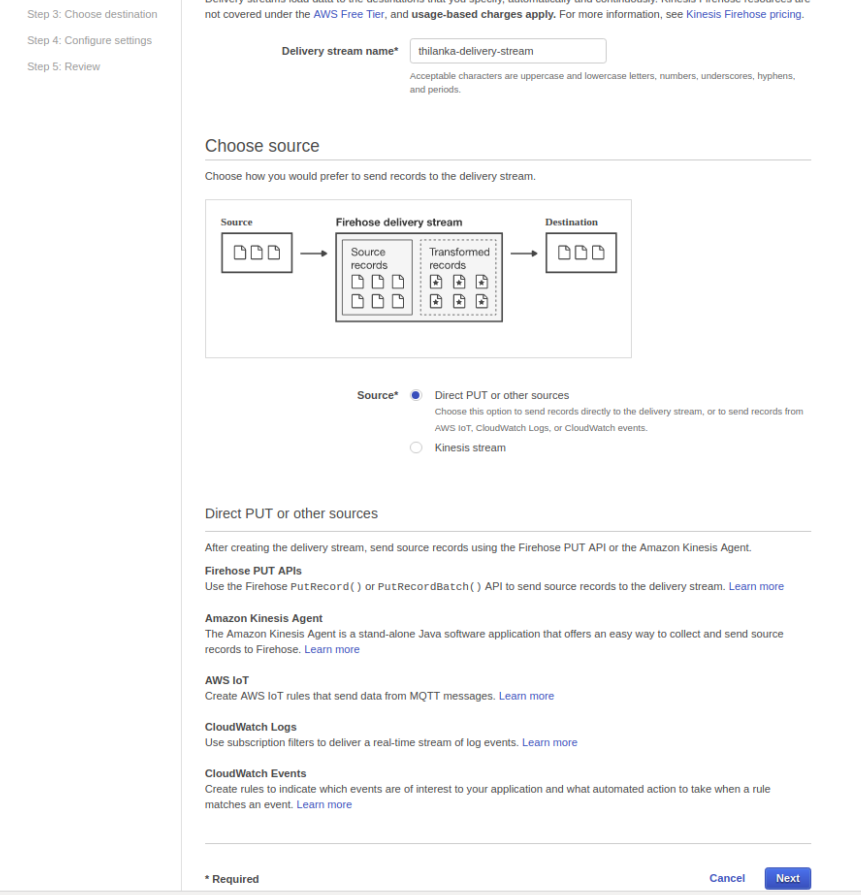

In the AWS console, go to Kinesis Firehose and click the Create delivery stream button. Name the delivery stream “thilanka-delivery-stream”, choose Direct PUT as the source, and click Next.

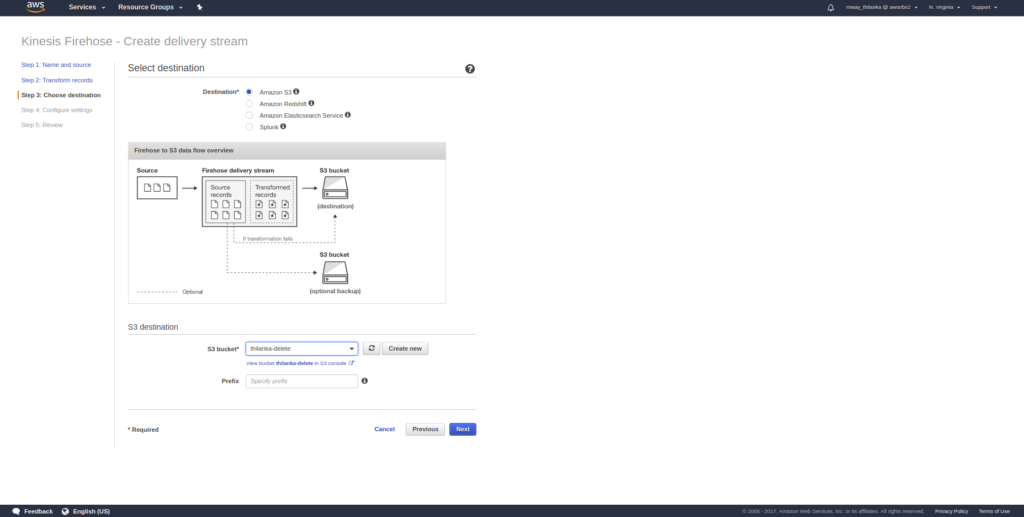

Once the source is configured, Firehose needs a destination to deliver the data in the stream to. Select Amazon S3 as the destination and click Next. This tutorial assumes you have already created an S3 bucket for this purpose.

The next page sets the buffer size and buffer interval. Firehose delivers buffered data to the destination as soon as either limit is reached, whichever comes first, so choose the smallest buffer interval (60 seconds) to keep delivery delays short. In the IAM role section, click Create new IAM role; this role contains the permissions Firehose needs to write to the specified S3 bucket. Review the configuration and click Finish.
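If you prefer to script this step, the console choices above correspond roughly to the input of the Firehose CreateDeliveryStream API. The sketch below only builds that input object and does not call AWS. The bucket name, the Firehose role ARN, and the 5 MB buffer size are placeholders chosen for illustration, and `buildDeliveryStreamInput` is a hypothetical helper; you would pass the result to an SDK client yourself (for example `CreateDeliveryStreamCommand` from `@aws-sdk/client-firehose`).

```javascript
// Hypothetical helper: builds CreateDeliveryStream input mirroring the console choices.
function buildDeliveryStreamInput({ streamName, bucketName, firehoseRoleArn }) {
  return {
    DeliveryStreamName: streamName,
    DeliveryStreamType: 'DirectPut', // the "Direct PUT" source in the console
    ExtendedS3DestinationConfiguration: {
      RoleARN: firehoseRoleArn, // role Firehose assumes to write to the bucket
      BucketARN: `arn:aws:s3:::${bucketName}`, // S3 bucket ARNs carry no region or account
      BufferingHints: {
        IntervalInSeconds: 60, // flush after 60 seconds...
        SizeInMBs: 5, // ...or once 5 MB is buffered, whichever comes first
      },
    },
  };
}

const input = buildDeliveryStreamInput({
  streamName: 'thilanka-delivery-stream',
  bucketName: 'my-log-bucket', // placeholder bucket name
  firehoseRoleArn: 'arn:aws:iam::123456789012:role/my-firehose-role', // placeholder ARN
});
console.log(JSON.stringify(input, null, 2));
```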

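Create IAM Role for CloudWatch Logs

Next, CloudWatch Logs needs an IAM role that allows it to put data into the delivery stream. We’ll create this role with the AWS CLI, so install the CLI and configure your access keys (for example with `aws configure`) before continuing. Then create a file named TrustPolicyForCWL.json containing the following trust policy, which lets the CloudWatch Logs service in us-east-1 assume the role: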

```json
{
  "Statement": {
    "Effect": "Allow",
    "Principal": { "Service": "logs.us-east-1.amazonaws.com" },
    "Action": "sts:AssumeRole"
  }
}
```
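The trust policy hard-codes the us-east-1 CloudWatch Logs service principal. If your Lambda function and delivery stream live in another region, the principal has to name that region instead. A minimal sketch that prints the same document for a given region; `trustPolicyForRegion` is a hypothetical helper name.

```javascript
// Hypothetical helper: the same trust policy as above, parameterised by region.
function trustPolicyForRegion(region) {
  return {
    Statement: {
      Effect: 'Allow',
      Principal: { Service: `logs.${region}.amazonaws.com` }, // regional CloudWatch Logs principal
      Action: 'sts:AssumeRole',
    },
  };
}

// Print the us-east-1 version used in this tutorial.
console.log(JSON.stringify(trustPolicyForRegion('us-east-1'), null, 2));
```

Saved as, say, trust-policy.js, it can be run with `node trust-policy.js > TrustPolicyForCWL.json` to produce the file used by the next command.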

Run the following command to create the role, using the trust policy document you just created:

```bash
aws iam create-role --role-name thilankaCWLFirehosePutData --assume-role-policy-document file://TrustPolicyForCWL.json
```

If the role is created successfully, you will see output like the following. Take note of the role’s ARN (the Arn field); you’ll need it later.

```json
{
  "Role": {
    "AssumeRolePolicyDocument": {
      "Statement": {
        "Action": "sts:AssumeRole",
        "Effect": "Allow",
        "Principal": {
          "Service": "logs.us-east-1.amazonaws.com"
        }
      }
    },
    "RoleId": "AROAJAPAJ2EQY6RGHVU66",
    "CreateDate": "2017-12-14T06:43:45.079Z",
    "RoleName": "thilankaCWLFirehosePutData",
    "Path": "/",
    "Arn": "arn:aws:iam::052445111578:role/thilankaCWLFirehosePutData"
  }
}
```

Next, get the ARN of the delivery stream. We’ll use it to create a permissions policy for the stream and attach that policy to the newly created role:

```bash
aws firehose describe-delivery-stream --delivery-stream-name thilanka-delivery-stream
```

```json
{
  "DeliveryStreamDescription": {
    "DeliveryStreamType": "DirectPut",
    "HasMoreDestinations": false,
    "LastUpdateTimestamp": 1513078570.058,
    "VersionId": "2",
    "CreateTimestamp": 1513076387.847,
    "DeliveryStreamARN": "arn:aws:firehose:us-east-1:052445111578:deliverystream/thilanka-delivery-stream",
    ...
```
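The first statement of the permissions policy that follows uses the resource arn:aws:firehose:us-east-1:052445111578:*, which is the delivery stream ARN with everything after the account ID replaced by a wildcard. The sketch below splits an ARN into its standard fields (arn:partition:service:region:account-id:resource) and rebuilds that account-wide wildcard; `parseArn` is a hypothetical helper.

```javascript
// Hypothetical helper: splits an ARN of the form arn:partition:service:region:account-id:resource.
function parseArn(arn) {
  const parts = arn.split(':');
  if (parts.length < 6 || parts[0] !== 'arn') throw new Error(`Not an ARN: ${arn}`);
  const [, partition, service, region, accountId] = parts;
  // The resource part may itself contain ':' characters, so re-join the remainder.
  const resource = parts.slice(5).join(':');
  return { partition, service, region, accountId, resource };
}

const streamArn =
  'arn:aws:firehose:us-east-1:052445111578:deliverystream/thilanka-delivery-stream';
const { partition, service, region, accountId, resource } = parseArn(streamArn);

// Account-wide wildcard, as used in PermissionsForCWL.json.
const accountWide = `arn:${partition}:${service}:${region}:${accountId}:*`;
console.log(accountWide); // arn:aws:firehose:us-east-1:052445111578:*
console.log(resource); // deliverystream/thilanka-delivery-stream
```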

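Take note of the DeliveryStreamARN value. Then create a file named PermissionsForCWL.json containing the following permissions policy, which allows all Firehose actions on the delivery streams in the account and the iam:PassRole action on the role itself: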

```json
{
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["firehose:*"],
      "Resource": ["arn:aws:firehose:us-east-1:052445111578:*"]
    },
    {
      "Effect": "Allow",
      "Action": ["iam:PassRole"],
      "Resource": ["arn:aws:iam::052445111578:role/thilankaCWLFirehosePutData"]
    }
  ]
}
```
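The policy above grants every Firehose action (firehose:*) on every delivery stream in the account. If you want something tighter, one option is to allow only the record-writing actions on this single stream. The sketch below generates such a document with a hypothetical `scopedPermissionsPolicy` helper. Treating firehose:PutRecord and firehose:PutRecordBatch as sufficient for CloudWatch Logs is an assumption; check it against the current CloudWatch Logs subscription documentation before relying on it.

```javascript
// Hypothetical helper: a narrower alternative to PermissionsForCWL.json.
// Assumption: CloudWatch Logs only needs to write records to this one stream.
function scopedPermissionsPolicy(streamArn, roleArn) {
  return {
    Statement: [
      {
        Effect: 'Allow',
        Action: ['firehose:PutRecord', 'firehose:PutRecordBatch'],
        Resource: [streamArn], // this delivery stream only, not the whole account
      },
      {
        Effect: 'Allow',
        Action: ['iam:PassRole'],
        Resource: [roleArn],
      },
    ],
  };
}

const policy = scopedPermissionsPolicy(
  'arn:aws:firehose:us-east-1:052445111578:deliverystream/thilanka-delivery-stream',
  'arn:aws:iam::052445111578:role/thilankaCWLFirehosePutData'
);
console.log(JSON.stringify(policy, null, 2));
```

The printed JSON has the same shape as PermissionsForCWL.json, so it can be saved under that name and attached with the same put-role-policy command.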

Run the following AWS CLI command to attach the permissions policy to the role:

```bash
aws iam put-role-policy --role-name thilankaCWLFirehosePutData --policy-name Permissions-Policy-For-CWL --policy-document file://PermissionsForCWL.json
```

Now that the IAM role has the necessary permissions policy, create a subscription filter on the Lambda function’s log group in CloudWatch Logs. The subscription filter sends matching log events to the Kinesis delivery stream, using the role we just created:

```bash
aws logs put-subscription-filter \
  --log-group-name "/aws/lambda/thilankaFirehoseProducer" \
  --filter-name "Destination" \
  --filter-pattern "thilankaFirehoseProducer" \
  --destination-arn "arn:aws:firehose:us-east-1:052445111578:deliverystream/thilanka-delivery-stream" \
  --role-arn "arn:aws:iam::052445111578:role/thilankaCWLFirehosePutData"
```
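The filter pattern thilankaFirehoseProducer is a single term, so only log events whose message contains that term are forwarded to the stream. The line our handler writes contains it, while the START, END, and REPORT lines Lambda writes for each invocation do not include the function name. The sketch below approximates this as a case-sensitive substring check. It is a deliberate simplification, not the real CloudWatch Logs matcher, which supports much richer syntax (multiple terms, quoted phrases, JSON selectors); the helper name and sample messages are made up for illustration.

```javascript
// Rough approximation (not the real CloudWatch Logs matcher):
// a single-term pattern keeps events whose message contains the term.
function matchesSingleTerm(pattern, message) {
  return message.includes(pattern);
}

// Made-up sample messages shaped like what the function's log group receives.
const sampleMessages = [
  'START RequestId: 1111-2222 Version: $LATEST',
  '[THILANKA_LOG] log triggered from thilankaFirehoseProducer: Thu Dec 14 2017 06:50:00 GMT+0000',
  'END RequestId: 1111-2222',
];

const forwarded = sampleMessages.filter((m) => matchesSingleTerm('thilankaFirehoseProducer', m));
console.log(forwarded.length); // 1
```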

Test executing Lambda function to verify logs in S3

Finally, test the setup by running the Lambda function again (for example with the Test button) and checking whether its log events arrive in the S3 bucket. Because of the 60-second buffer interval, it may take a minute or so before new objects appear in the destination bucket.

Thilanka Liyanarachchi

Senior Software Engineer