Basics of K-Means Clustering

Introduction to Machine-Learning:

Machine Learning is considered as the execution of utilizing the existing algorithms, in order to inject data, grasp from it, and then make a resolution or forecast about something. So rather than developing software procedures with a certain set of directives to achieve a specific task, the machine is instructed using huge amounts of data and algorithms that provides it the capability to absorb, how to accomplish the endeavour.

As an example, one type of algorithm is a classification algorithm. This basically injects data into different clusters or rather segments. The classification algorithm is generally used to distinguish handwritten alphabets and also could be utilized to categorize emails into spam and not-spam.

Why do we need Machine Learning:

Identifying patterns in a given data-set could only be possible by human brains. When the data-set being

immense, the time consumption to evaluate the entire dataset increases. This is where Machine Learning

comes into play to reduce the time consumption, when the given data-set is large.

In machine learning, tasks are generally classified into broad categories. These categories are based

on how learning is received or how feedback on the learning is given to the system developed.

Machine Learning is typically divided into two major categories:

Unsupervised

This is used to outline conclusions from data-sets, which contains of input data without labeled responses. It can be notified that, in machine learning it’s differentiated from the supervised algorithm, where the learner is only disposed with some unlabeled samples. The most common unsupervised machine learning approach is cluster analysis, which is utilized for experimental data analysis to discover hidden patterns or grouping, in a given data-set. The appropriate time to utilize the unsupervised algorithm in machine learning, to the greatest extent is when there’s no data on required outcomes, like discovering a target market for a completely new product that, a business has never sold before.

Supervised

A technique in machine learning, where a machine utilizes a cluster of training samples to pursue, to precisely accomplish a task. In supervised learning, we do have access to samples of faultless input-output pairs that we can show to the machine during the training phase. The example of handwriting recognition is generally considered as a supervised learning task. We’ll be injecting the algorithm, a bunch of images of handwritten digits alongside with the accurate labels for those digits, and the algorithm typically absorbs the patterns that relate images to their labels. The it will grasp the correlation among the images and their interconnected numerals, and execute that learned correlation to categorize entirely new images that the algorithm hasn’t seen before.

Supervised Learning is divided into 2 types: Few examples:

Classification: This is where the forecast is based on categorical response values where the data can be

divided into certain classifications. The labels normally appears to be in categorical form and symbolizes

a finite number of classes. Few examples could be:

1. Given a set of input features, predict whether a Breast Cancer is Benign or Malignant. 2. Given an

image correctly, classify as containing Birds or Fishes. 3. From a given email, predict whether it’s a

spam or not.

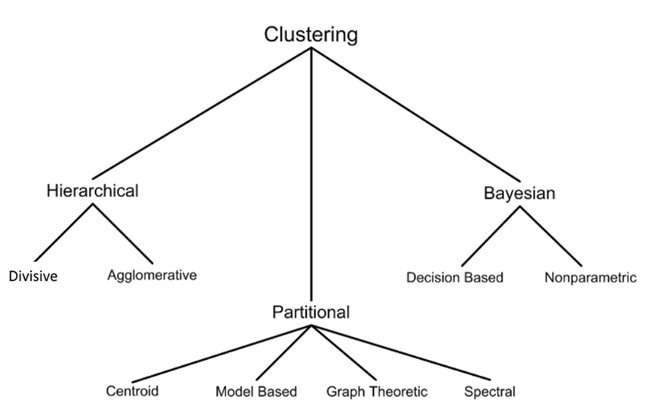

What is clustering in machine learning?

Clustering algorithms are predominantly utilized in an unsupervised way. They are proposed with a bunch of

data instances that must be classified corresponding to some notion of similarity. Basically, clustering

is an approach of gathering homogeneous entities together. The end objective of this unsupervised learning

technique, is to identify similarities in the data point and group related data points together.

The sole intent of clustering is to decide the internal grouping in a bunch of unlabeled data. But how

to determine what establishes a good clustering? It can be shown that there is no definite best criterion

which would be unbiased of the final aim of the clustering.

But for this post, let’s stick to the most popular and widely used algorithm K-Means.

What is K-Means clustering?

Clustering is generally seen as an unsupervised learning method for data analysis. Nevertheless, in few cases,

information about the problem domain is available in addition to the data instances themselves.

K-means clustering is mainly utilized, when you have unlabeled data (i.e., data without defined categories

or groups). The purpose of this unsupervised machine learning algorithm is to choose clusters or rather

groups ,in a given data set, with the number of groups indicated by the variable K. This works repeatedly,

in order to assign each and every data point to one of the K cluster, based on the features that are given.

The data points are generally grouped based on the feature similarity. The end results of the K-means clustering

algorithm would be:

1. Centroids of the number of clusters, which were identified (denoted as K).

2. Labels for the training data.

How it works?

It is known that the seeding procedure utilized throughout the clustering, can remarkably transform the model.

Here, the seeding is being considered as the primary position of data points into possible centroids.

As an example, if the given data-set includes a number of outliers, and an outlier is chosen to seed the

clusters, none of the other data points would fit well with that cluster, and the cluster could be a singleton.

In other words, a cluster with only one data point. Selecting the random initial centroids badly will take

longer to intersect which may result in poor clustering. There are few solutions to overcome this:

Some use-cases on which this algorithm has been applied?

References & Further Readings:

Kulasangar Gowrisangar

AuthorDeveloping a Custom Audit Trail and a Notification Service for a Workflow Based Serverless Application on AWS

READ ARTICLE

Human Emotions Recognition through Facial Expressions and Sentiment Analysis for Emotionally Aware Deep Learning Models

READ ARTICLE