Developing an authentication service using face recognition

In modern enterprise application development, authentication is a key concern. Enterprise back ends support various authentication mechanisms. Some of the most popular ones in use today are Single-Factor Authentication, Two-Factor Authentication (TFA), Single Sign-On (SSO) and Multi-Factor Authentication (MFA). In addition to these, face recognition is also widely used for authentication.

In this blog post, I will focus on how face recognition can be used to authenticate users of a ReactJS-based web application built with TensorFlow.js and hosted on AWS.

TensorFlow.js

TensorFlow.js is a library for machine learning in JavaScript. Its models can run in the browser or in Node.js.

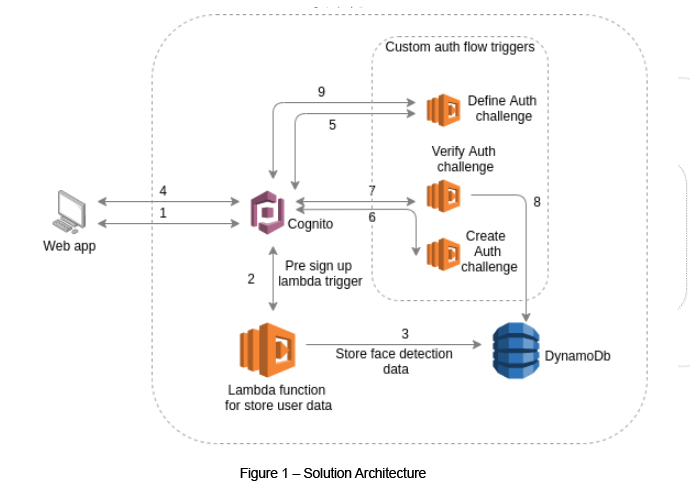

The Solution Architecture

Step 1 - The user signs up (see Steps 2 and 3).

Steps 2 and 3 - We use AWS Cognito as the authentication service, but Cognito cannot currently authenticate users with face detection data. Hence, to store the face detection data, we use a Lambda function that is invoked by Cognito's pre sign-up Lambda trigger. During sign-up, this function writes the user's face detection data to DynamoDB.
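A minimal sketch of such a pre sign-up function is shown below. It assumes the client sends the face detection data as a JSON string under a hypothetical `faceData` key in the sign-up request's client metadata, and it receives the DynamoDB write as a hypothetical `saveFaceData` function so the logic can be shown without the AWS SDK.

```javascript
// Sketch of a Cognito pre sign-up trigger that stores face detection data.
// Assumptions: the landmarks arrive as JSON in clientMetadata.faceData
// (hypothetical key), and saveFaceData (hypothetical) writes one item to a
// DynamoDB table, for example with the AWS SDK.
function createPreSignUpHandler(saveFaceData) {
  return async (event) => {
    const metadata = event.request.clientMetadata || {};
    if (!metadata.faceData) {
      // Throwing from a pre sign-up trigger makes Cognito reject the sign-up.
      throw new Error('Face detection data is required to sign up');
    }

    // Parse here so malformed data is rejected at sign-up time
    // (JSON.parse throws on invalid input, which also rejects the sign-up).
    const landmarks = JSON.parse(metadata.faceData);
    if (!Array.isArray(landmarks) || landmarks.length === 0) {
      throw new Error('Face detection data must be a non-empty array');
    }

    // Store the landmarks keyed by the Cognito user name.
    await saveFaceData({ userName: event.userName, landmarks });

    // Cognito expects the event object to be returned.
    return event;
  };
}
```

On the client, the value would travel in the `ClientMetadata` parameter of Cognito's `SignUp` call, and `saveFaceData` could wrap a DynamoDB put keyed by user name.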

Step 4 - The user signs in (see Steps 5 to 9).

Steps 5 to 9 - These steps use Cognito's custom authentication flow, in which Lambda triggers define, create and verify an auth challenge.
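The define and create steps can be sketched as two async Lambda handlers. The define logic follows the usual custom-challenge pattern (ask for a `CUSTOM_CHALLENGE`, issue tokens after a correct answer, fail after repeated wrong answers); the attempt limit and the challenge parameter names and values are assumptions for illustration.

```javascript
// Assumption: allow up to three face comparisons per sign-in attempt.
const MAX_ATTEMPTS = 3;

// Define auth challenge trigger: inspects the challenges answered so far in
// this sign-in session and decides whether to issue tokens, fail, or ask again.
async function defineAuthChallenge(event) {
  const session = event.request.session || [];
  const last = session[session.length - 1];

  if (last && last.challengeName === 'CUSTOM_CHALLENGE' && last.challengeResult === true) {
    // The verify trigger accepted the face data: sign the user in.
    event.response.issueTokens = true;
    event.response.failAuthentication = false;
  } else if (session.length >= MAX_ATTEMPTS) {
    // Every attempt so far failed: give up.
    event.response.issueTokens = false;
    event.response.failAuthentication = true;
  } else {
    // First round, or a retry after a failed comparison.
    event.response.issueTokens = false;
    event.response.failAuthentication = false;
    event.response.challengeName = 'CUSTOM_CHALLENGE';
  }
  return event;
}

// Create auth challenge trigger: describes the challenge sent to the client.
// The parameter names and values below are hypothetical.
async function createAuthChallenge(event) {
  if (event.request.challengeName === 'CUSTOM_CHALLENGE') {
    // Returned to the client together with the challenge.
    event.response.publicChallengeParameters = { answerType: 'faceData' };
    // Passed only to the verify trigger, never to the client.
    event.response.privateChallengeParameters = { challengeType: 'FACE' };
    // Recorded in the session so later rounds can see which challenge ran.
    event.response.challengeMetadata = 'FACE_CHALLENGE';
  }
  return event;
}
```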

In the verify step, the Lambda function retrieves the user's face detection data that was stored in DynamoDB during sign-up and compares it with the data submitted at sign-in to decide whether it belongs to the same user. If it does, the challenge is marked as answered correctly and Cognito issues new tokens. If it does not, authentication fails with an error.
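The post does not say how the two sets of face detection data are compared, so the sketch below uses a deliberately simple, swapped-in technique: it normalises each set of facemesh landmarks for position and scale, then accepts the user if the mean distance between corresponding points is below a threshold. The `loadFaceData` lookup and the `THRESHOLD` value are hypothetical, and this naive landmark comparison is not a robust biometric check; a production system would more typically compare embeddings produced by a dedicated face recognition model.

```javascript
// Assumed threshold on the mean normalised landmark distance; tune on real data.
const THRESHOLD = 0.05;

// Centre the landmarks on their centroid and scale them to unit RMS radius,
// so the comparison ignores where the face sits in the frame and its size.
function normalise(points) {
  const n = points.length;
  const centroid = new Array(points[0].length).fill(0);
  for (const p of points) p.forEach((v, i) => { centroid[i] += v / n; });
  const centred = points.map((p) => p.map((v, i) => v - centroid[i]));
  const sumSq = centred.reduce((s, p) => s + p.reduce((a, v) => a + v * v, 0), 0);
  const rms = Math.sqrt(sumSq / n) || 1; // avoid dividing by zero
  return centred.map((p) => p.map((v) => v / rms));
}

// Mean Euclidean distance between corresponding normalised landmarks.
function faceDistance(a, b) {
  if (a.length !== b.length) return Infinity;
  const na = normalise(a);
  const nb = normalise(b);
  let total = 0;
  for (let i = 0; i < na.length; i++) {
    total += Math.sqrt(na[i].reduce((s, v, d) => s + (v - nb[i][d]) ** 2, 0));
  }
  return total / na.length;
}

// Verify auth challenge trigger. loadFaceData(userName) is a hypothetical
// function that reads the landmarks stored in DynamoDB at sign-up.
function createVerifyHandler(loadFaceData) {
  return async (event) => {
    const stored = await loadFaceData(event.userName);
    let submitted;
    try {
      submitted = JSON.parse(event.request.challengeAnswer);
    } catch {
      submitted = null; // malformed answer: treat it as a failed attempt
    }
    event.response.answerCorrect =
      Array.isArray(stored) && stored.length > 0 &&
      Array.isArray(submitted) &&
      faceDistance(stored, submitted) < THRESHOLD;
    return event;
  };
}
```

Setting `answerCorrect` is all the verify trigger does; the define step then sees the result in the session and decides whether Cognito issues tokens.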

The face detection component

As a prerequisite, a few modules need to be installed.

First, install the following module to display the webcam feed:

npm i --save react-webcam

Then install the TensorFlow.js and facemesh modules:

- @tensorflow/tfjs - required by the other TensorFlow.js modules
- @tensorflow-models/facemesh - detects faces and returns the face landmark values

npm i --save @tensorflow/tfjs

npm i --save @tensorflow-models/facemesh

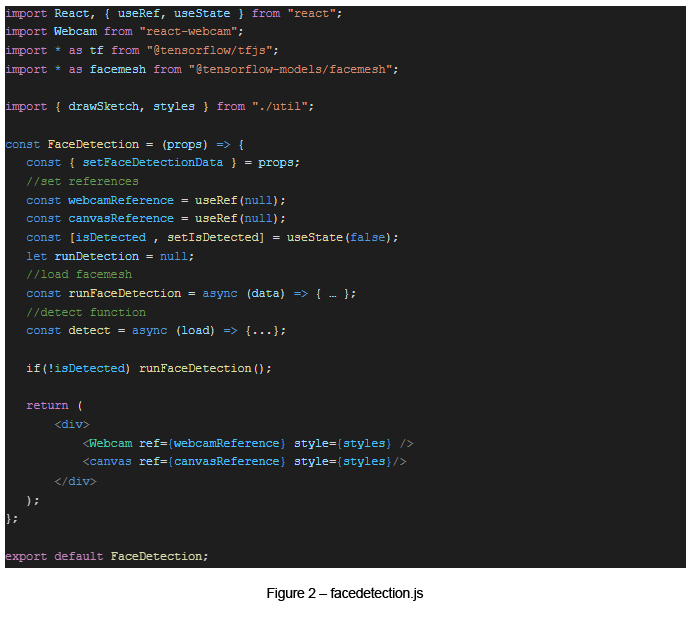

Before detecting faces, we place a canvas over the webcam view; the facemesh sketches and any text are drawn on this canvas. The canvas is sized from the details of the webcam video. This can be done with the useRef and useState ReactJS hooks (see Figure 2).
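As a minimal sketch, the helper below sizes the overlay canvas to the webcam video and writes a status message on it. It assumes `video` is the `<video>` element exposed by the react-webcam component (commonly `webcamRef.current.video`) and `canvas` is `canvasRef.current`, a `<canvas>` positioned over the webcam with CSS. The name `setupOverlay` and the text styling are hypothetical.

```javascript
// Size the overlay canvas to match the webcam video and write a status
// message on it. Returns the 2D drawing context for later sketching.
function setupOverlay(video, canvas, message) {
  // videoWidth/videoHeight are the intrinsic size of the camera stream.
  const width = video.videoWidth;
  const height = video.videoHeight;

  // Give the video and the canvas the same size so that landmark
  // coordinates from facemesh line up with pixels on the canvas.
  video.width = width;
  video.height = height;
  canvas.width = width; // note: resizing a canvas also clears it
  canvas.height = height;

  // Draw the status text in the top-left corner.
  const ctx = canvas.getContext('2d');
  ctx.font = '16px sans-serif';
  ctx.fillStyle = 'lime';
  ctx.fillText(message, 10, 20);
  return ctx;
}
```

In the component this would be called once the video is ready, for example `setupOverlay(webcamRef.current.video, canvasRef.current, 'Looking for a face...')`.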

Using the facemesh module

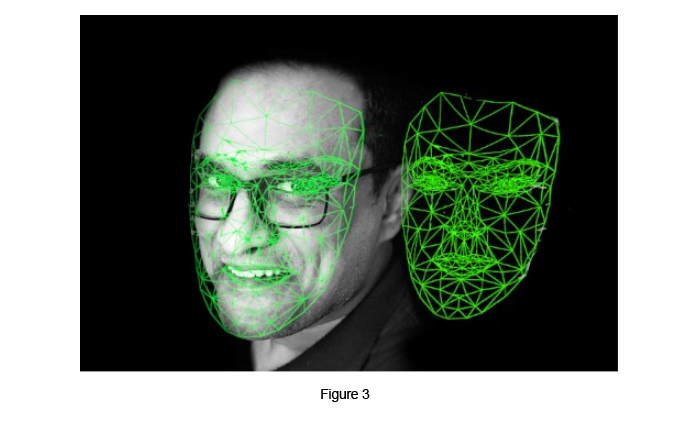

In this component, we do not capture and store user images in real time. Instead, we use the facemesh module in TensorFlow.js to draw a sketch of the face from the face detection data, as in Figure 3.

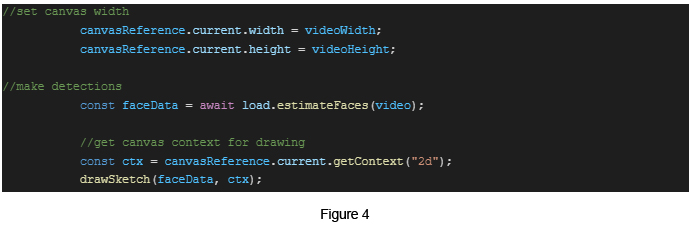

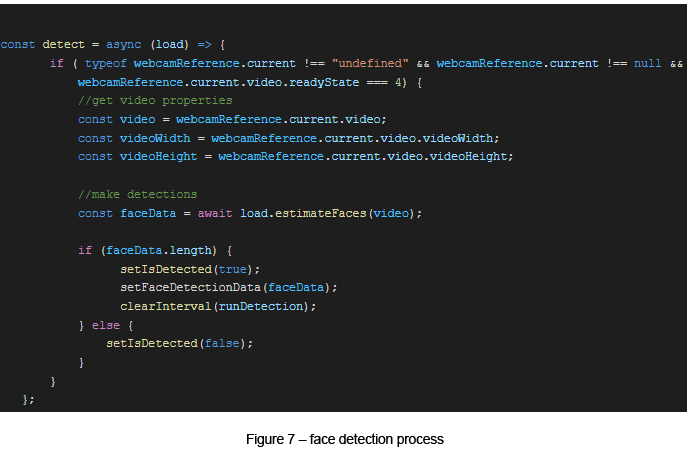

This logic should run only when webcam data is available. The current video is passed to the facemesh module to obtain the face detection data.
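A minimal sketch of such a detect function follows, assuming `model` is the object resolved by `facemesh.load()` from @tensorflow-models/facemesh and `webcam` is `webcamRef.current` from react-webcam. It returns the landmarks of the first detected face, or `null` when the video is not ready or no face is found; looking only at the first face is a simplifying assumption.

```javascript
// HTMLMediaElement.readyState value meaning enough data is available to play.
const HAVE_ENOUGH_DATA = 4;

async function detect(model, webcam) {
  // Skip this round until react-webcam has mounted and the stream has data.
  if (!webcam || !webcam.video || webcam.video.readyState !== HAVE_ENOUGH_DATA) {
    return null;
  }

  // estimateFaces accepts a video element and resolves to an array
  // with one prediction per detected face.
  const predictions = await model.estimateFaces(webcam.video);
  if (predictions.length === 0) return null;

  // scaledMesh holds [x, y, z] landmark points in the video's pixel space.
  return predictions[0].scaledMesh;
}
```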

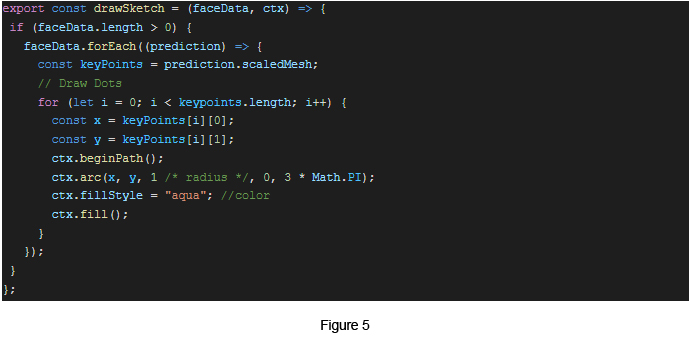

To sketch the face data, you can create a separate function. In our case, we created a new function, drawSketch, in a new file and updated the detect function to call it.

This is our drawSketch function:

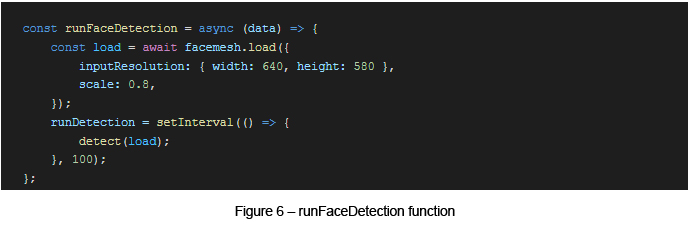
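For readers who cannot see the screenshot, a minimal sketch of a drawSketch function could look like the following. It assumes the function receives the predictions resolved by `model.estimateFaces(video)` and the 2D context of the overlay canvas, and it plots each landmark in `scaledMesh` as a small dot; the colour and dot size are arbitrary, and this is not necessarily how the function shown above draws the mesh.

```javascript
// Plot every facemesh landmark of every detected face as a small dot.
function drawSketch(predictions, ctx) {
  ctx.fillStyle = 'aqua';
  for (const prediction of predictions) {
    // scaledMesh: [x, y, z] points in video pixel coordinates; z is ignored.
    for (const [x, y] of prediction.scaledMesh) {
      ctx.beginPath();
      ctx.arc(x, y, 1, 0, 2 * Math.PI); // 1 px radius full circle
      ctx.fill();
    }
  }
}
```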

Once the sketching code is in place, the facemesh model must be loaded before it can detect faces. We can create a runFaceDetection() function for this task.

Within runFaceDetection(), we run the face detection process (detect) every 100 ms (see Figure 7) until a face is clearly detected.
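The polling loop can be sketched as below. To keep it runnable outside the browser, the model loader and the detect step are passed in as parameters; in the component they would be, for example, `() => facemesh.load()` and the component's detect function, which is an assumption about how the pieces are wired together.

```javascript
// Load the model once, then call detect every intervalMs until it returns
// face data. Resolves with that data, or rejects if detect throws.
async function runFaceDetection(loadModel, detect, intervalMs = 100) {
  // Load the facemesh model once, before polling starts.
  const model = await loadModel();

  return new Promise((resolve, reject) => {
    let busy = false; // true while a detect call is still in flight

    const timer = setInterval(async () => {
      if (busy) return; // skip this tick instead of overlapping calls
      busy = true;
      try {
        const face = await detect(model);
        if (face) {
          clearInterval(timer); // a face was found: stop polling
          resolve(face);
        }
      } catch (err) {
        clearInterval(timer);
        reject(err);
      } finally {
        busy = false;
      }
    }, intervalMs);
  });
}
```

The `busy` flag is a design choice that skips a tick when the previous `estimateFaces` call has not finished, which can happen on slow devices. In a React component, the interval would also need clearing when the component unmounts; this sketch only clears it once a face is found or an error occurs.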

References

- www.tensorflow.org/js/models

- docs.aws.amazon.com/cognito/latest/developerguide/user-pool-lambda-challenge.html

Gayashan Galagedara

Senior Software Engineer